How to Choose Between Liquid Clustering and Partitioning with Z-Order in LakeHouse

The views expressed in this blog are my own and do not represent official guidance from Databricks.

Optimizing query performance is crucial in the realm of big data. Delta Lake, an open source storage layer, offers two primary methods for organizing data: liquid clustering and partitioning with Z-order. This blog post will help you navigate the decision-making process between these two approaches.

Understanding the Basics: Liquid Clustering vs. Partitioned Z-Order Tables

Clustering in Delta Lake enhances query performance by organizing data based on frequently accessed columns, similar to indexing in relational databases. The key difference is that clustering physically sorts the data within the table rather than creating separate index structures.

Liquid Clustering

Liquid clustering is a newer algorithm for Delta Lake tables, offering several advantages:

Flexibility: You can change clustering columns at any time.

Optimization for Unpartitioned Tables: It works well without partitioning.

Efficiency: It doesn’t re-cluster previously clustered files unless explicitly instructed.

Liquid clustering relies on optimistic concurrency control (OCC) to handle conflicts when multiple writes occur to the same table.

Partitioned Z-Order Tables

Partitioning combined with Z-ordering is a traditional approach that:

Control: Allows greater control over data organization.

Parallel Writes: Supports parallel writes more effectively.

Fine-Grained Optimization: Enables optimization of specific partitions.

However, data engineers must be aware of querying patterns upfront to choose an appropriate partition column.

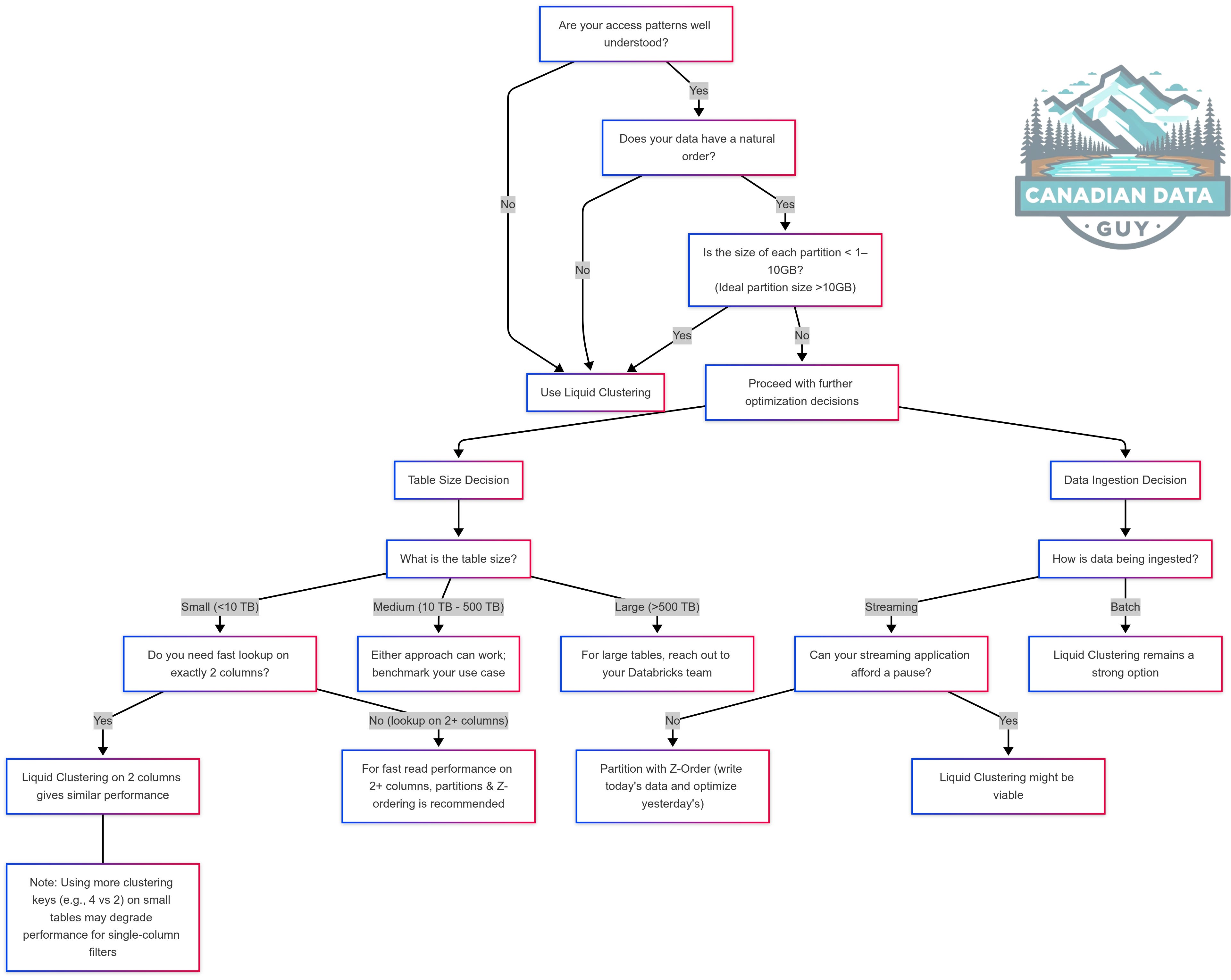

Decision Tree

Built in March 2025, this decision tree will be continuously updated as technology evolves. As new enhancements emerge, my understanding will grow, and this resource will be refined accordingly. This is a complex topic, but I will do my best to provide at least an intuitive grasp to help you develop a clearer understanding.

Factors to Consider When Choosing

Table Size

Small tables (< 10 TB): If you can liquid cluster on 2 columns both approaches might give you similar performance. *If you want fast read performance on 2+ columns, partitions and order might be the better approach.

Medium tables (10 TB -500TB): Either approach can work; consider doing a benchmark for your use case.

Large tables (> 500 TB): You should reach out to your Databricks representative and have a discussion.

Note: Liquid is being actively improved so the guidance could change

Query Patterns

If users consistently include the partition column in their queries, partitioning can be very effective.

Liquid clustering may be more suitable for more flexible query patterns where users may not always include the partition column.

Data Distribution

If you have uneven partition sizes, the liquid will be better.

Date-based data (e.g., clickstream data) often benefits from partitioning.

For data without a clear partitioning strategy, liquid clustering may be better.

Partition Column Selection

When choosing a partition column:

Select immutable columns (e.g., click date, sale date)

Avoid high-cardinality columns like timestamps

For timestamp data, create a derived date column for partitioning

Aim for fewer than 10,000 distinct partition values.

Each partition should contain at least ~1-10 GB of data.

Real-World Example: Amazon Clickstream Data

Let's consider a real-world scenario using Amazon's clickstream data:

The table stores 3 years of data for 10 countries

Partitioning by click date results in approximately 1,000 partitions (365 * 3)

10 countries * 1,000 date partitions = 10,000 total partitions

This setup is within the recommended partition count (< 10,000) and provides good control over the data. Here's how we might structure this table:

Partition by

click_date, countryZ-order by

merchant_id, andadvertiser_id

Optimizing the Partitioned Table

To maintain optimal performance, you can run a daily optimization job on the newest partition:

OPTIMIZE table_name

WHERE click_date = 'ANY_DATE' and country = 'CANADA'

ZORDER BY ( merchant_id, advertiser_id)

This approach ensures good performance for date-range queries and lookups on Z-ordered columns.

Optimistic Concurrency Control

Delta Lake uses optimistic concurrency control to manage parallel writes. Here's how it works:

Writers check the current version of the Delta table (e.g., version 100).

They attempt to write a new JSON file (e.g., 101.json).

Only one writer can succeed in creating this file.

The "losing" writer checks if there are conflicts with what was previously written.

If no conflicts, it creates the next version (e.g., 102.json).

This approach works well for appends but can be challenging for updates, especially when multiple writers are trying to modify the same files.

Potential Pitfalls and Best Practices

Here are some key considerations and common mistakes to avoid:

Do not add Co-related columns to liquid: If two columns are highly correlated, you only need to include one of them as a clustering key. Example, if you have click_date, click_timestamp then only cluster by click_timestamps

Skip meaningless keys: When it comes to clustering, try to avoid using meaningless keys such as UUIDs, which are inheritable and unsortable strings. If possible, refrain from using them in both liquid and z-order clustering. However, I understand that sometimes customers require quick lookups on these UUID columns. In those cases, you may include them.

Over-Partitioning: A common mistake is creating too many partitions. While partitioning helps with performance, too many partitions can result in overhead. A good rule of thumb is to keep partition counts under 10,000. For example, if you're storing three years of daily click data, partitioning by

click_datewould result in around 1,000 partitions for three years—well within the 10,000-partition guideline. Example: Avoid partitioning on high cardinality columns (e.g., timestamps). This would result in too many partitions, leading to performance degradation. Instead, partition on a date column and ensure it has enough data per partition.Schedule Optimization: Regularly run the OPTIMIZE command.

For partitioned tables, ensure new partitions are clustered and Z-ordered for efficient querying. Including the WHERE clause is important because the Zorder algorithm has a limitation: it can cluster files that were already clustered.

For Liquid Clustered tables, life is simple; run optimize at a cadence, and it will not recluster files it has clustered before. Every 2-24 hours should be enough; depends on how much GB you are producing per hour.

Conclusion

Choosing between liquid clustering and partitioned Z-order tables depends on various factors including table size, write patterns, and query requirements. Always consider your specific use case and be prepared to test both approaches to determine the best fit for your data and query patterns. The right choice will significantly impact your query performance and overall data management efficiency.

Keep This Post Discoverable: Your Engagement Counts!

Your engagement with this blog post is crucial! Without claps, comments, or shares, this valuable content might become lost in the vast sea of online information. Search engines like Google rely on user engagement to determine the relevance and importance of web pages. If you found this information helpful, please take a moment to clap, comment, or share. Your action not only helps others discover this content but also ensures that you’ll be able to find it again in the future when you need it. Don’t let this resource disappear from search results — show your support and help keep quality content accessible!

References

https://docs.databricks.com/aws/en/delta/clustering

https://www.databricks.com/blog/2018/07/31/processing-petabytes-of-data-in-seconds-with-databricks-delta.html

https://docs.databricks.com/en/delta/clustering.html

https://www.databricks.com/blog/announcing-general-availability-liquid-clustering

QQ: In your example under section’ Parallel Write Considerations’ though the Kafka writers are writing concurrently they are just append only operations and so there won’t be conflicts with concurrent writers even if we decide to use liquid clustering right?

Let’s say I am writing to a destination table with less than 1TB of data